Chances are, you’ve heard the name ChatGPT a million times in recent weeks.

With so much information scattered around the web, so many bold claims, and endless social media posts, it can be hard to grasp what ChatGPT can really do for us.

To some, it seems like just another AI tool (only a bit more hyped up); to others, it signals the end of the world as we know it.

We decided to find out what people really think about generative AI and all the buzz around it.

Let’s dig in.

First, we need the basics to understand what the fuss is all about.

ChatGPT is an artificial intelligence model that generates text conversationally. Its dialogue format lets you chat with the tool in natural language. ChatGPT can answer follow-up questions, admit its mistakes, reject requests it deems inappropriate or unethical, and solve many complex problems.

ChatGPT’s capabilities are remarkably broad: from coding, translating, and proofreading text to supporting influencer marketing.

Sounds like something straight out of a sci-fi movie, doesn’t it?

And here’s what people think about it.

ChatGPT: main findings

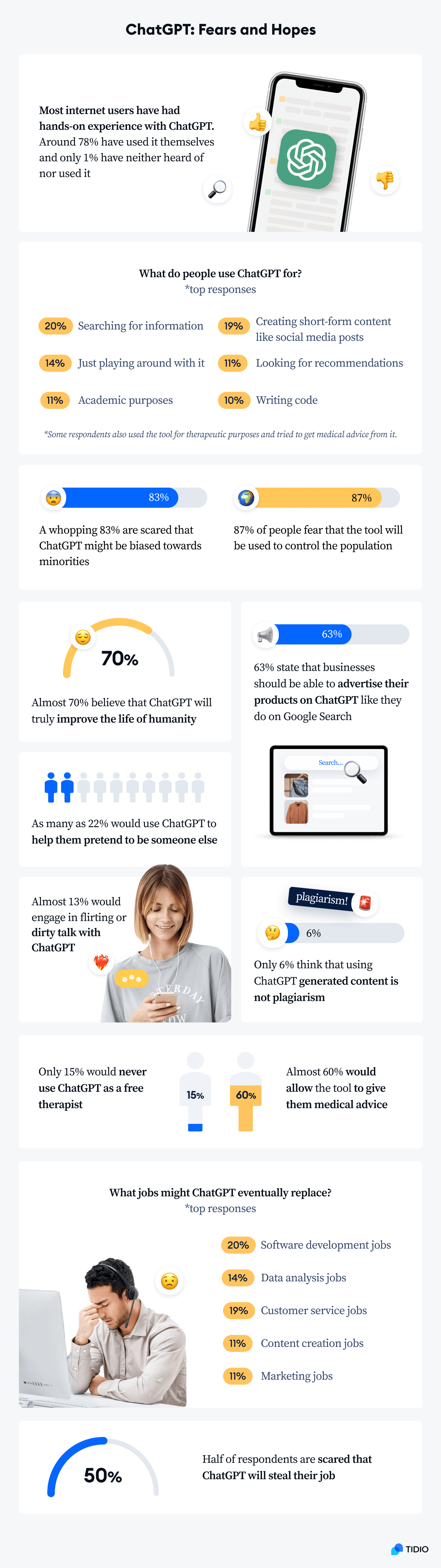

We surveyed internet users and collected 945 responses, explored notable examples of ChatGPT’s capabilities, and experimented with the tool ourselves. It’s safe to say that some of the results surprised us.

For instance, a whopping 70% of respondents believe that ChatGPT will eventually replace Google as the primary search engine. If that happens, the internet as we know it could change dramatically.

Here’s what else we discovered:

- Almost 40% of people are afraid that ChatGPT will destroy the job market

- As many as 60% of respondents would allow ChatGPT to offer medical advice and give health-related consultations

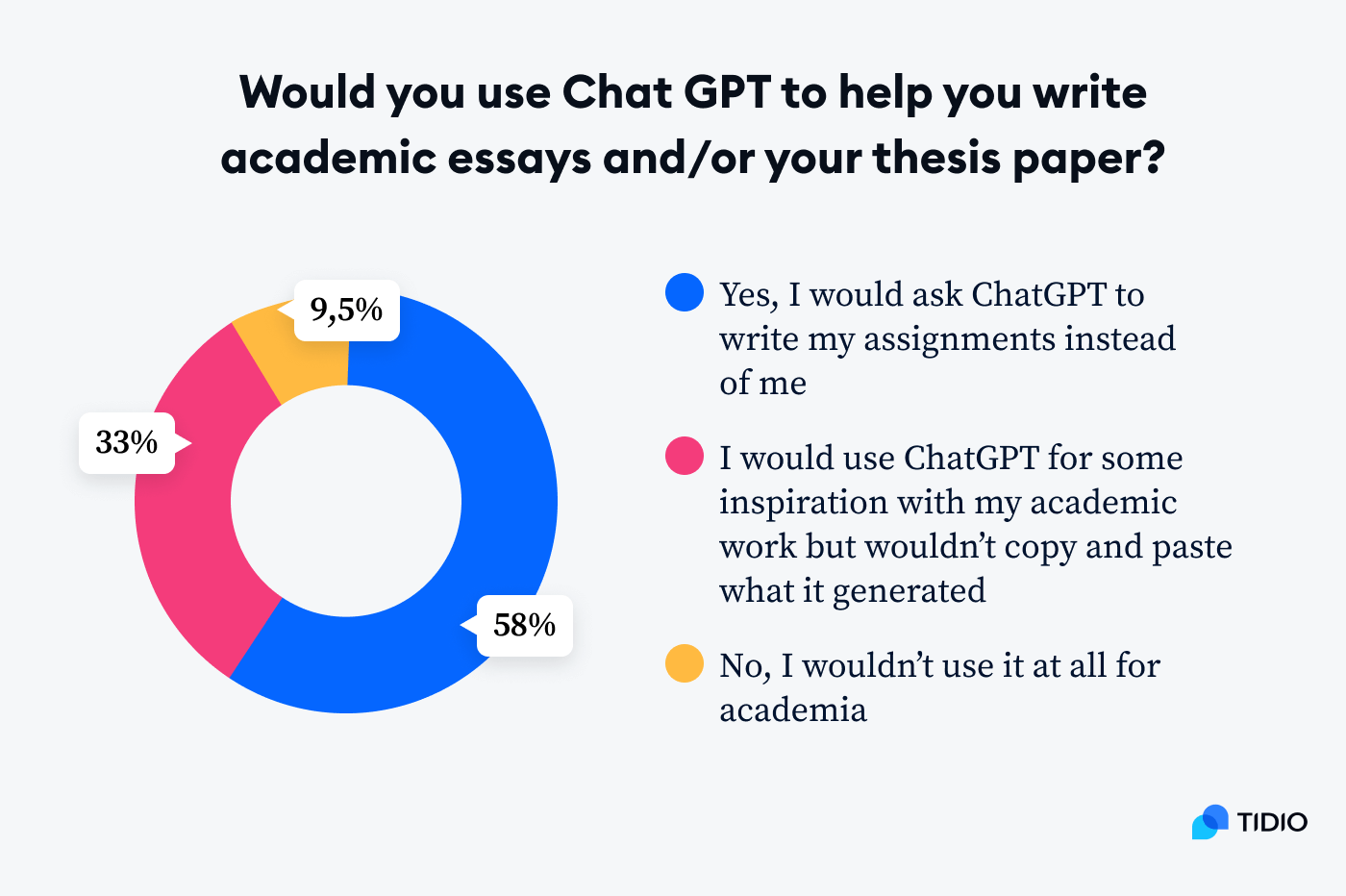

- Only 9% of the respondents would not use ChatGPT for academic purposes, while more than 57% would let ChatGPT write their thesis for them

- More than 86% believe that ChatGPT could be used to manipulate and control the population

- As many as 63% of respondents state that businesses should be able to advertise their products on ChatGPT like they do on Google Search

- Almost 13% would engage in flirting or dirty talk with ChatGPT

- As many as 22% would use ChatGPT to help them pretend to be someone else, 17% would let it write a wedding speech, and 14% would have it write a breakup text to their partner

- Most participants (66%) mistook a poem written by Sylvia Plath for an AI-generated one

Before we go deeper into the stats and real examples of what ChatGPT can do, let’s take a closer look at the background of ChatGPT and OpenAI.

Below is everything you need to know about the tool and its creators.

ChatGPT: a blessing or a curse?

With all the rumors about ChatGPT taking over humanity, OpenAI might seem like some sort of ‘Evil, Inc.’ The good news is that this is far from the truth.

OpenAI is a leading AI research company focused on building safe, ethical, and beneficial AI systems that can help humanity. The company started as a non-profit, then transitioned to a capped-profit model to attract more investment. It gained traction in 2020 after releasing its GPT-3 text model.

Here’s what a researcher at OpenAI says about their vision:

Safely aligning powerful AI systems is one of the most important unsolved problems for our mission. Techniques like learning from human feedback are helping us get closer, and we are actively researching new techniques to help us fill the gaps.

Apart from ChatGPT, OpenAI has introduced other AI-powered models and tools. Among them are:

- Image generation models, including DALL-E—a neural network that creates images and art from natural language text prompts

- AI for audio processing and generation, including Whisper, a multilingual speech recognition model, and Jukebox, which generates music as raw audio

- GPT-3 text models that generate, summarize, and paraphrase text written in natural language (ChatGPT itself is fine-tuned from a model in the GPT-3.5 series)

Read more: Did you know that AI can be biased? Check out this study on AI biases with examples from DALL-E 2, among other tools.

OpenAI has had its share of controversy. Some have criticized it for going for-profit, arguing that this is inconsistent with its vision to democratize AI and that “relying on venture capitalists doesn’t go together with helping humanity” (Vice).

Still, OpenAI is on a roll. It keeps releasing new tools and seems to take action when something appears to be off. For instance, multiple media outlets accused DALL-E 2 of being biased. In response, OpenAI issued a statement and updated the tool so that it depicts more diverse groups of people when a prompt doesn’t specify otherwise.

ChatGPT seems to be the most viral (and controversial) tool OpenAI has created so far. It has generated so much buzz in the media that even people far removed from the tech world were tempted to try it.

In fact, it was practically impossible to get into the tool in its early days: so many people wanted to test it that it was at full capacity most of the time. ChatGPT showed its fastest growth so far in January 2023, when it reportedly reached 100 million monthly active users. It simply took the world by storm.

So—

Are people excited about the seemingly endless possibilities they get with ChatGPT?

The reality is actually not so shiny. Let’s dig deeper into how society perceives ChatGPT.

Read more: Check out our research on wide-scale generative AI adoption after the release of ChatGPT

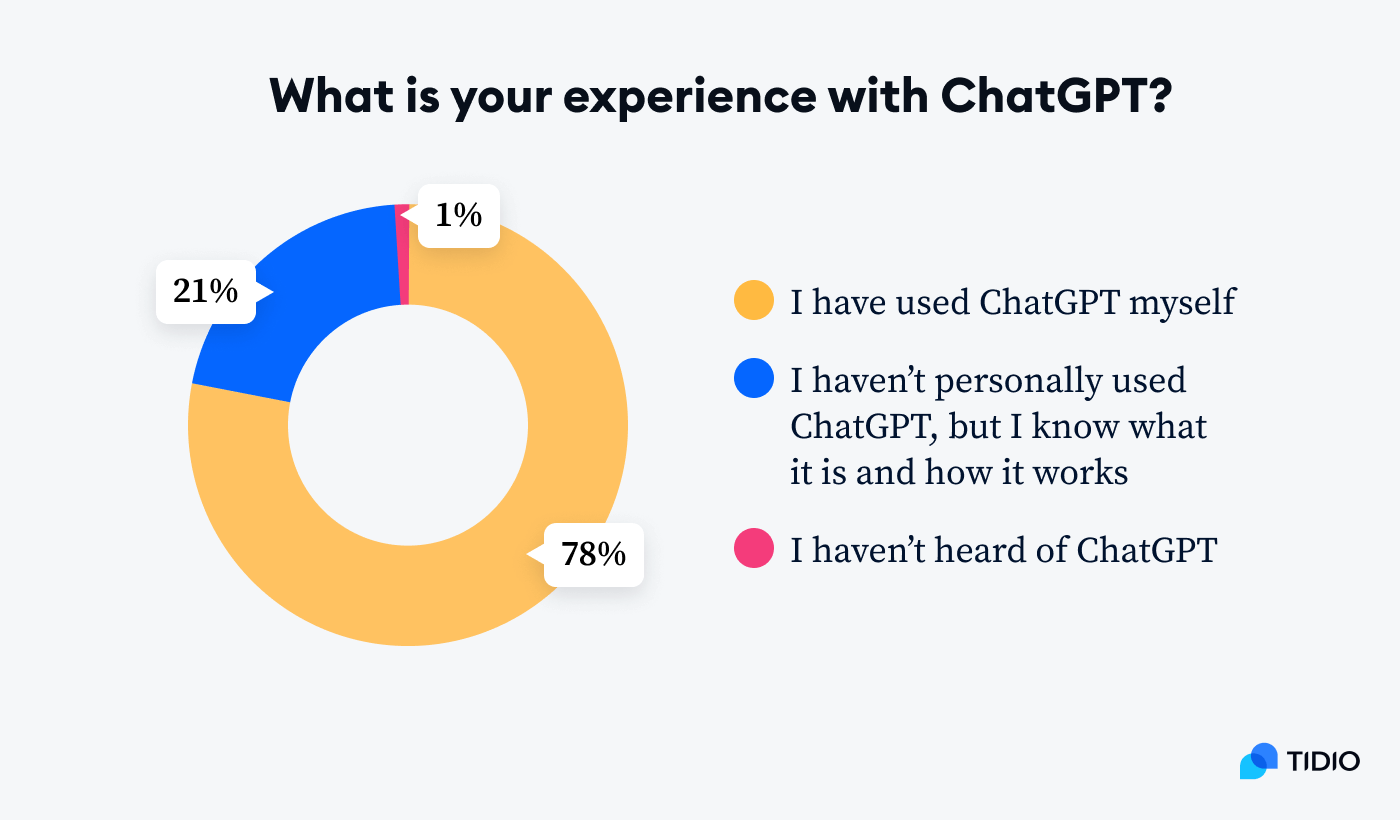

About 78% of the internet users we surveyed have personally tried ChatGPT

This number is, quite frankly, mind-blowing. For context, there were 5.16 billion internet users worldwide as of January 2023, so even if only a fraction of them have tried ChatGPT, it is easy to imagine how big a stir the tool has caused online.

The reason is simple: “Wow, ChatGPT can do practically anything” is not just a figure of speech.

People use it to ask questions (the way they would have asked Google just a year ago), create short- and long-form content, get recommendations on where to eat and what sneakers to buy, write code, hunt for jobs, generate business ideas, and more. Some have even asked ChatGPT for psychological or medical advice.

As the tool keeps improving, we can expect the number of users to grow even further.

What’s more, people seem generally satisfied with what ChatGPT generates for them. Most of our respondents rated the quality of the content the tool created for them as fairly good. In addition, 59% state that they trust ChatGPT to provide reliable and truthful information.

Read more: Want to know more examples of cool AI chatbots? Check out our list with the best ones for business & personal use. And if you want to feel the power of AI customer support in your business, try Tidio AI 🚀


Almost 63% of people believe ChatGPT will eventually make Google obsolete

The news about ChatGPT going so viral might have made Google flinch nervously.

First, people started typing their search requests directly into ChatGPT. Then, Microsoft made the bold move of launching a new Bing with ChatGPT integrated into it.

“Why would Bing ever come anywhere near Google?” you might be wondering. Well, along with this long-rumored integration, Microsoft also announced a new version of its Edge browser, with ChatGPT-powered search built into the sidebar. This might actually help Microsoft break out of its relatively low search market share and compete with Google for once.

For now, the search experience is still limited, and there is a waitlist to get the full version. However, it already does a pretty good job, even surpassing ChatGPT itself in some aspects.

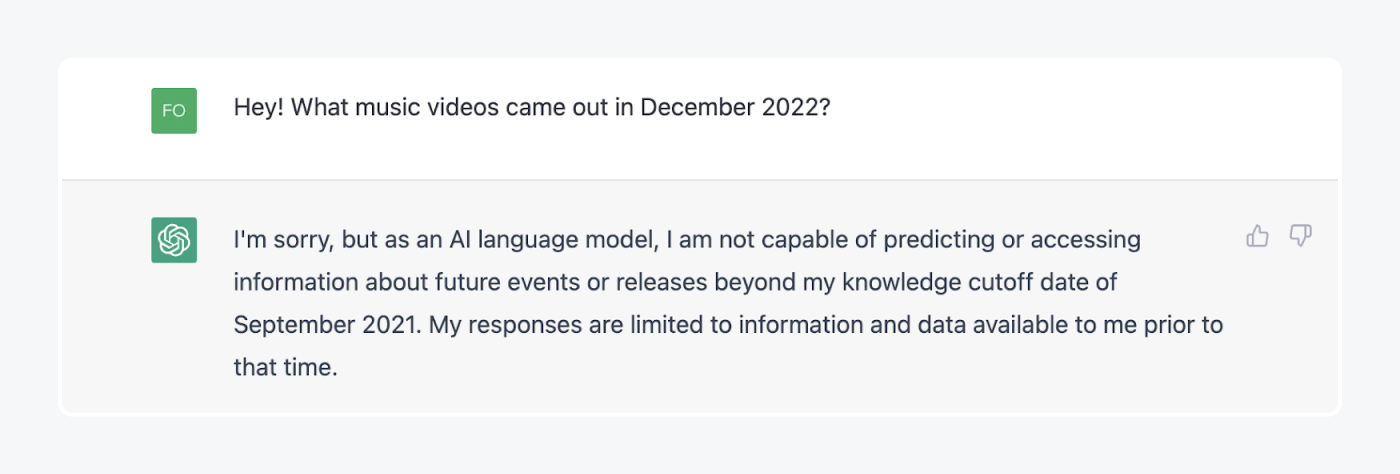

For instance, the original ChatGPT has no knowledge of the world after 2021, and so much has happened globally since then. Not to worry, though: Bing can pull in current information from the web, so it handles questions about recent events much better.

Is Google doomed? Not really. But should it put in extra effort to compete with AI-powered solutions similar to the Bing/ChatGPT integration? Definitely yes.

Saying that search as we know it is over might be a bit of an overstatement. However, we can’t deny that a big shift is happening. In fact, about 16% of internet users would use both Google and ChatGPT depending on what they are searching for.

A good rule of thumb is to always double-check the information you consume, be that from Google, ChatGPT, or any other resource. After all—none of them is safe from fake news and misinformation.

Read more: Find out about the issue of AI hallucinations. Learn how to spot misinformation and check out some essential fake news statistics.

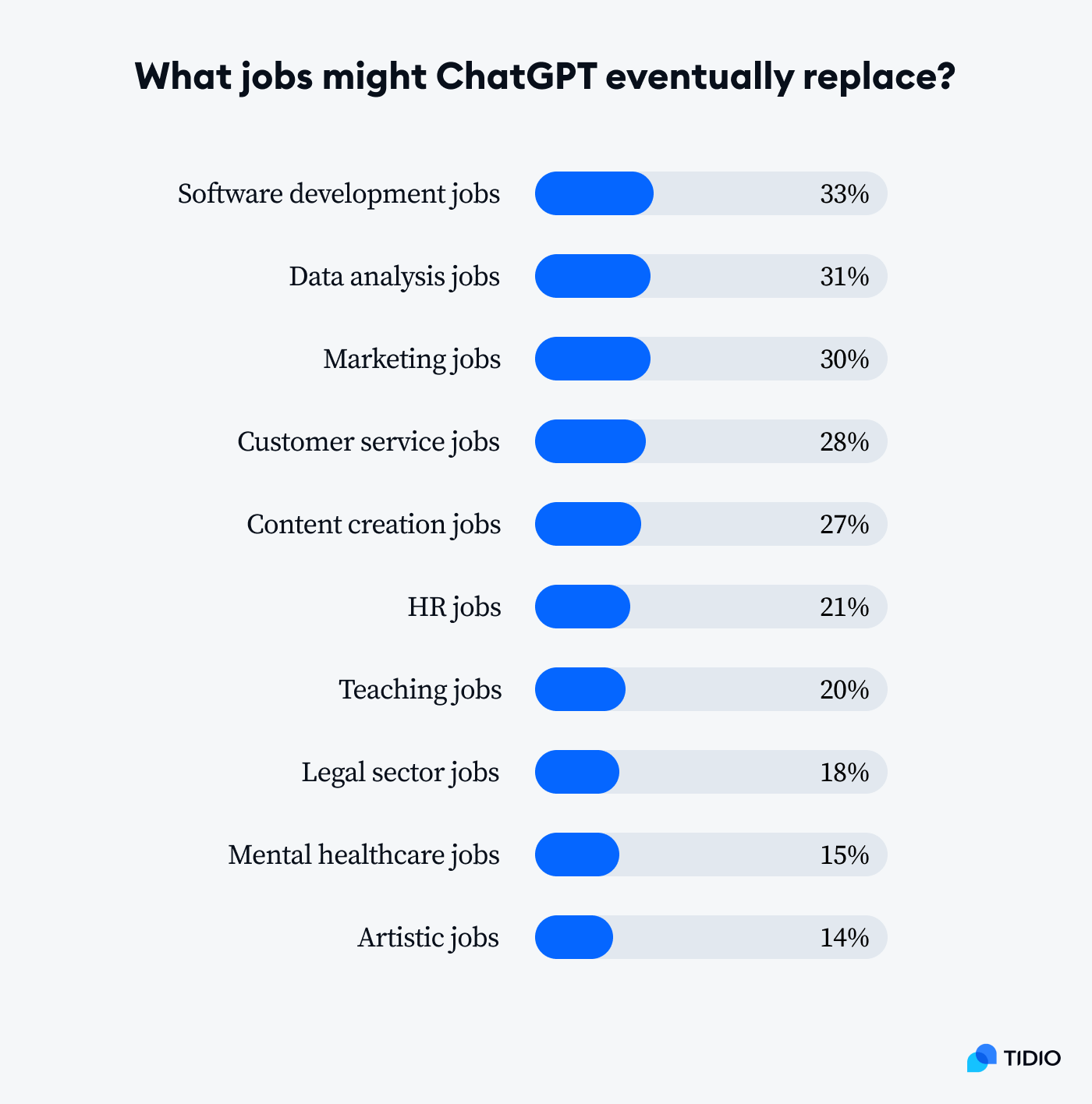

Around 50% of internet users are scared ChatGPT might replace them at work

The fear of AI taking over jobs is ever-present. However, before image- and text-generating AI emerged, it was mostly low-skilled jobs that seemed at risk. White-collar workers felt confident that no robot was coming for jobs that demand human creativity.

Then, ChatGPT came around.

These statistics are quite worrying for highly qualified specialists. For the first time, many people are convinced that roles like programmer and data analyst could be performed entirely by artificial intelligence.

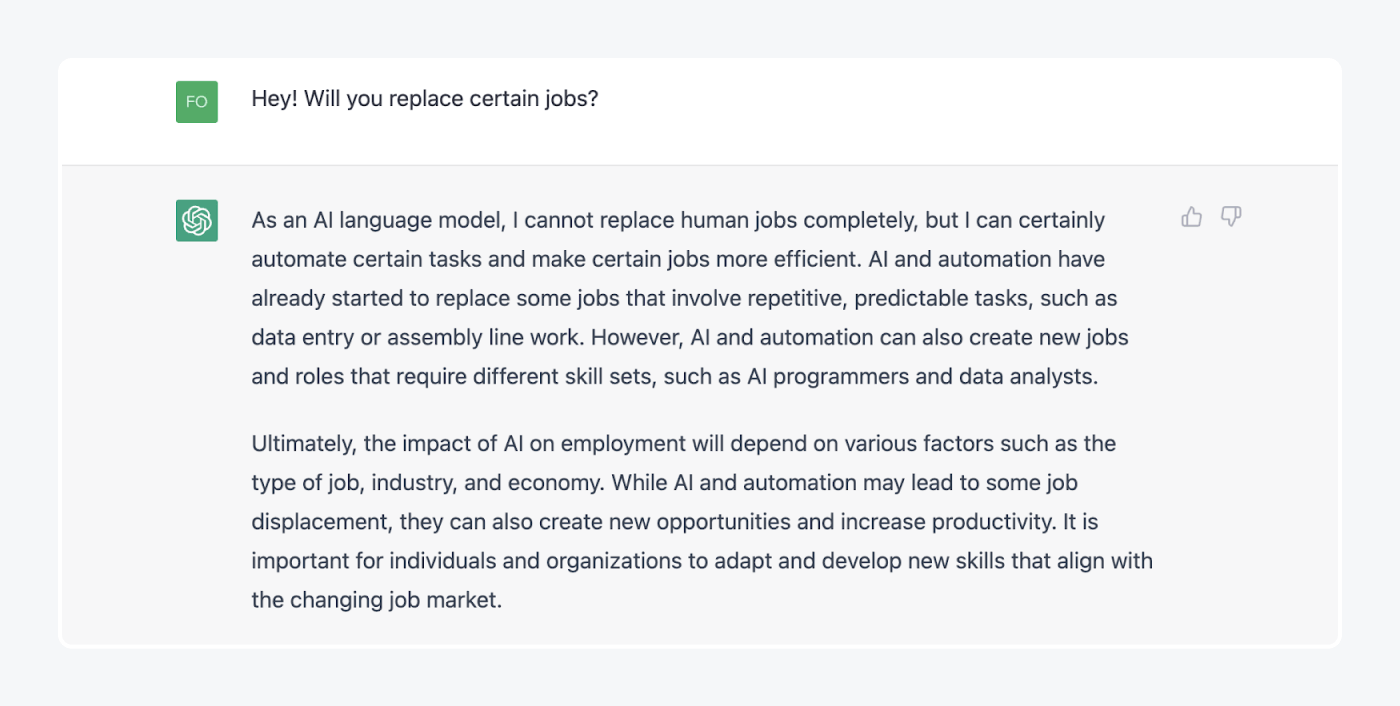

When you ask the tool itself whether it will replace any jobs, you get quite an optimistic response. ChatGPT says it won’t leave humans jobless; on the contrary, it assures us that it will help increase human productivity and create new opportunities.

Some people share this view: 1 in 5 respondents believe that we will work alongside ChatGPT and use it as a helper at work. We don’t know what the future holds or which AI developments are coming. However, it does feel like the big headlines about ChatGPT destroying the job market are mostly clickbait, at least for the time being.

A whopping 60% of people would like ChatGPT to give medical advice, while 57% would use it as a free therapist

Do you also feel like we are in a Black Mirror episode?

At this point, it might as well be true.

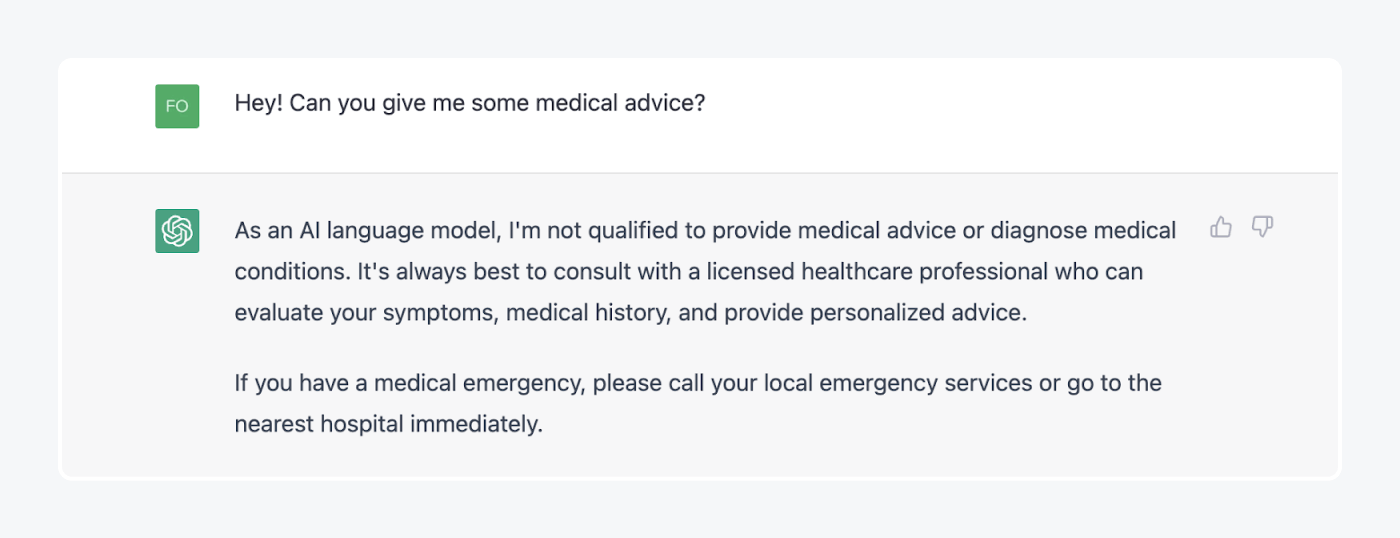

Currently, ChatGPT doesn’t give medical advice. Here is the proof:

Fair enough. In the end, it’s an AI-powered chatbot, not a doctor. However, many people seem to disagree with this approach. As many as 60% of our respondents believe ChatGPT should give medical consultations, while 20% think it should only offer basic health tips and refer users to a doctor for more serious matters.

Such strong interest in accessible, free medical assistance could be linked to the extremely high cost of healthcare in some countries, such as the US. Allowing ChatGPT to offer medical consultations could let thousands of Americans get help without the inevitable financial burden.

However, no AI can ever replace a trained and experienced medical professional. Letting ChatGPT give medical advice could be dangerous and have irreversible consequences. And even if AI were capable of offering safe and professional help, the healthcare industry would hardly allow it.

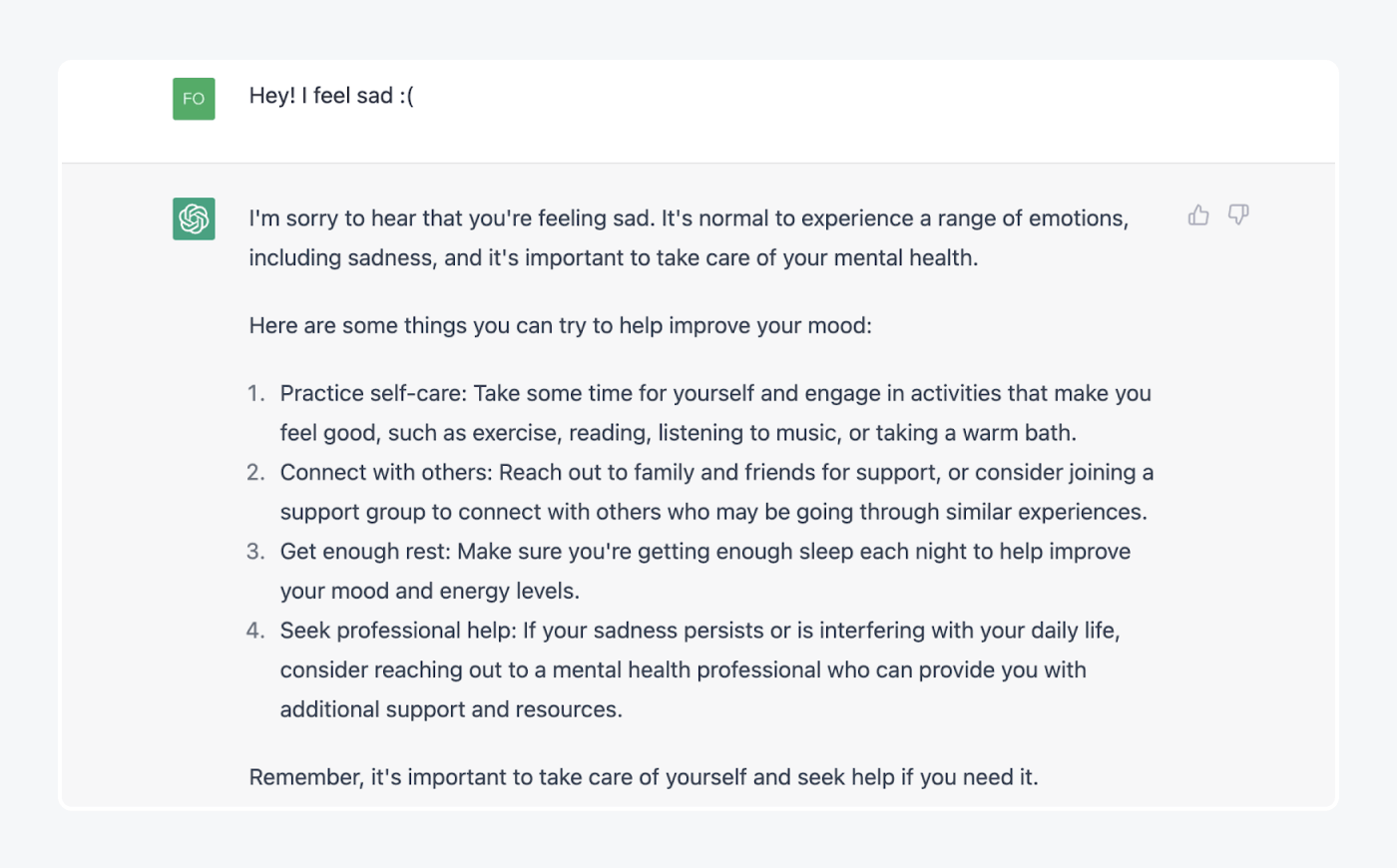

But what about mental health?

Right, this one is a bit trickier. Again, the cost of therapy with a licensed specialist is skyrocketing. This is likely one reason why 60% of our respondents would happily use the tool for free therapy. Almost 27% also mentioned that while it won’t replace a real psychologist for them, they might use ChatGPT for some mental-health-related issues.

Here’s what a Reddit user says about using ChatGPT for mental health:

Personally, I didn’t really feel like my problems were bad enough to go to therapy, and some of my issues are quite personal—I couldn’t really say it out loud to another person. That being said, even just writing out my problems is helpful, and ChatGPT’s responses are pretty nicely organized. It might even be giving me the courage to bring some of these other problems up to close friends/family that have helped with other things. I hit it with a very specific and personal issue around career, culture and family issues, and it spat out a nice list of things I could try.

ChatGPT lists generic things that can be helpful for mental health. And it’s reassuring that people report good experiences when getting mental health advice from AI.

It’s unlikely that AI will replace human therapists and psychologists. While it can give you generic suggestions on what to do (which can genuinely help), it cannot empathize with you, and it certainly cannot treat a mental disorder. Still, as long as it gives healthy advice and helps even one person out there, it’s an amazing technology.

Read more: Check out this study on the effects of technology on mental health.

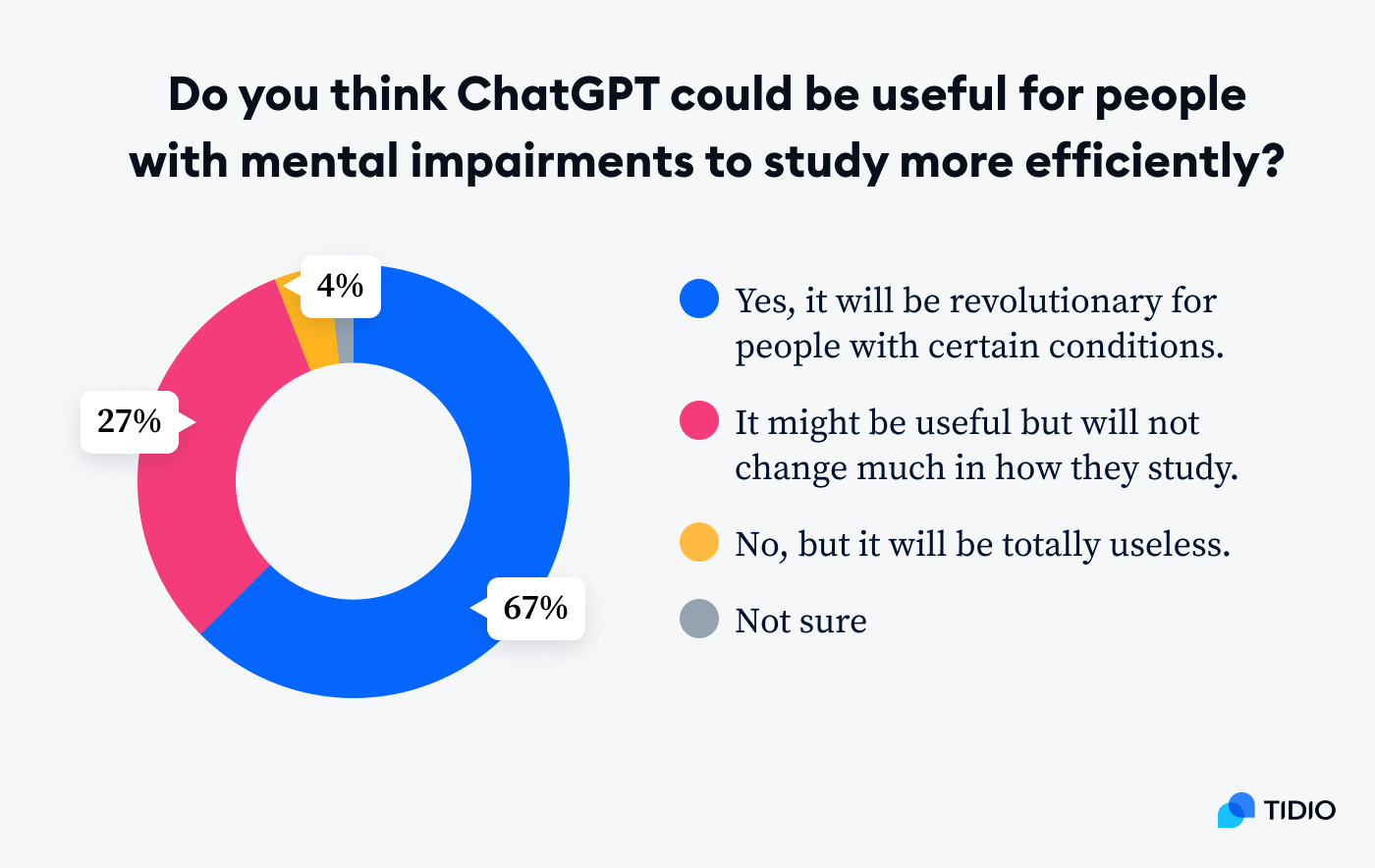

As many as 67% of respondents believe ChatGPT could help people with certain mental impairments study more efficiently

While ChatGPT cannot treat mental illnesses, it can make life much easier for people with mental impairments. Neurodivergent individuals could benefit from it in many ways: it can explain complicated terms in simple words, paraphrase texts, and help them overcome many of the barriers they face.

Individuals with learning disabilities and learning barriers can also benefit immensely from ChatGPT. It can potentially help them improve their writing and presentation skills, which could open up more opportunities for them in education and on the job market.

ChatGPT also has real potential to take assistive technology to another level. It can offer a safe, non-judgmental way to practice social communication, and it can help reduce anxiety, improve organization and time management, make daily tasks easier, and more.

All in all, ChatGPT can become a game-changer for many individuals with disabilities as it can change the way they consume information, learn, and explore the world. It can increase accessibility, reduce isolation, empower millions of people, and drive further technological advancements to improve the lives of those with disabilities.

Around 57% of people would let ChatGPT write their thesis for them, but 94% consider it plagiarism

This is quite interesting, though pretty worrying for the academic community.

ChatGPT is quite good with words. So good that students have reportedly already written their theses using the tool, often without their universities noticing.

According to our research, not many people would suffer pangs of conscience if AI got them through college. Around a third of our respondents would only use it for inspiration, while most people (57%) would simply use AI-generated text as is.

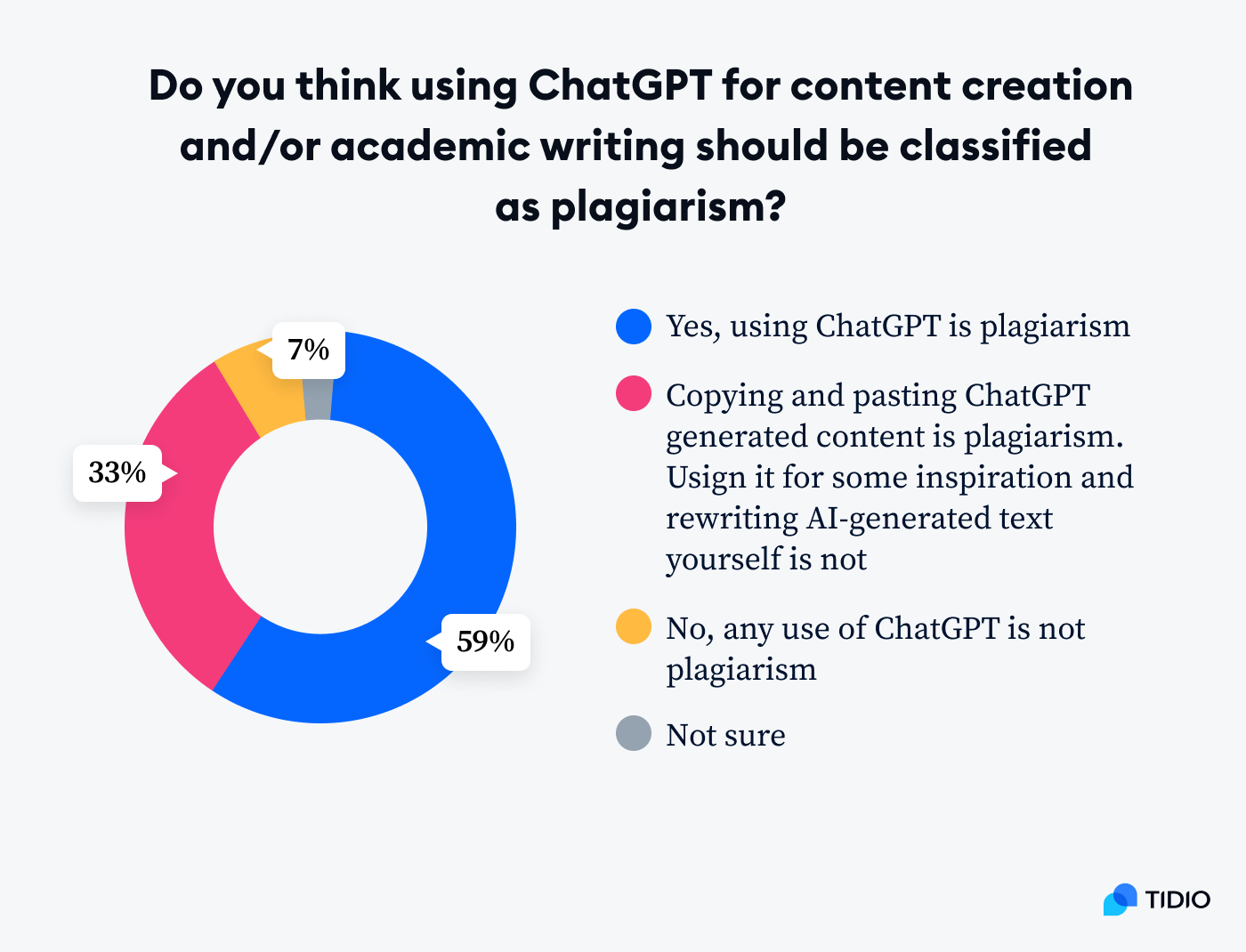

Is it plagiarism?

This is a very nuanced issue, widely discussed in the academic community and beyond. Our research shows that around 60% believe using ChatGPT in any form is plagiarism. At the same time, almost 33% hold that copying and pasting ChatGPT-generated content is plagiarism, but using it purely for inspiration and ideas is not.

Many universities do consider it plagiarism, though. And it’s wise to be careful with ChatGPT, as it has been known to make up information and present it as genuine.

To avoid hiccups and potential legal problems, many academic resources suggest crediting ChatGPT as you would a human author. APA has recommended citing ChatGPT as “personal communication”, while other style guides haven’t yet introduced their own ways of crediting the tool.

Public opinion seems to support crediting the tool: more than 63% of people believe ChatGPT should be credited in the same way as any human author. The remaining respondents are divided: about 18% believe ChatGPT should be credited, but differently from people, while 16% state that AI should not be cited at all.

All debates aside, ChatGPT is a genuine academic weapon. Have you heard about it taking on the bar exam? Well, there is more. ChatGPT has passed four law school exams at the University of Minnesota, a microbiology quiz, and the Wharton MBA exam, and it performed at or near the passing threshold on the US Medical Licensing Exam. Its results were far from perfect, but they were passing.

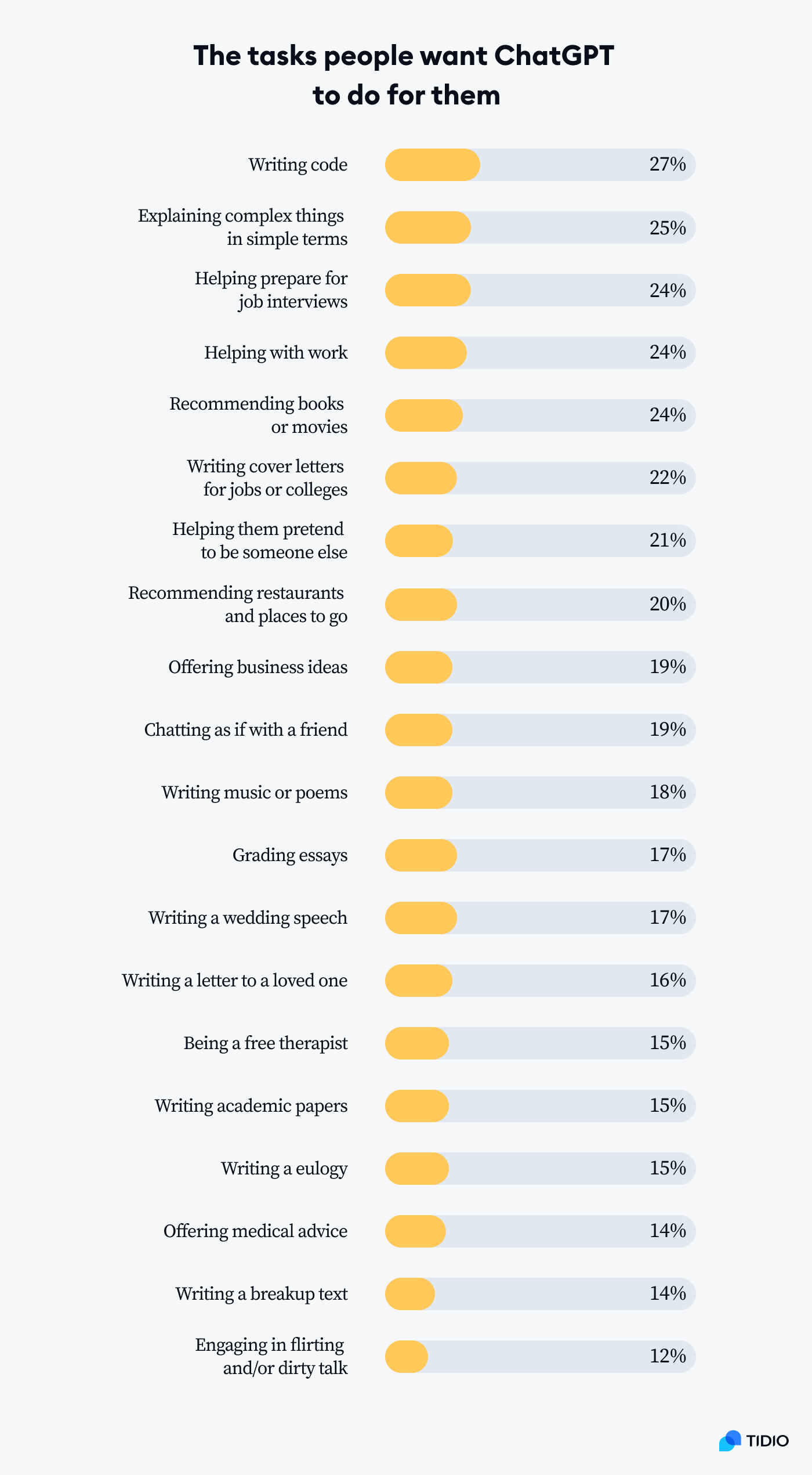

Writing code (27%), explaining complex things in simple terms (25%), and preparing for job interviews (24%) are the tasks people most readily delegate to ChatGPT

People are ready to delegate plenty of day-to-day tasks to ChatGPT. At this point, it’s common knowledge that it can write code (though it does make mistakes), essays, and cover letters, polish answers to job interview questions, and do other cool things that increase human productivity.

But—

As many as 1 in 5 people want to use ChatGPT to establish a fake identity. Unfortunately, this is possible, and it might have serious consequences in the future. ChatGPT makes it easy to create fake identities (e.g., by asking it to write a text in the style of a famous person), so it’s a good idea to be extra careful when talking to people online.

Moving on—around 17% are okay with ChatGPT writing their wedding speech, 15% don’t mind a eulogy written by AI, and about 14% would let ChatGPT write a breakup text. On top of that, around 12% would engage in flirting and/or dirty talk with ChatGPT.

Oh, well.

Love in the age of AI is already a weird concept, and ChatGPT only adds fuel to the fire. People are tempted to delegate emotionally hard tasks to emotionless AI. Ironic, don’t you think?

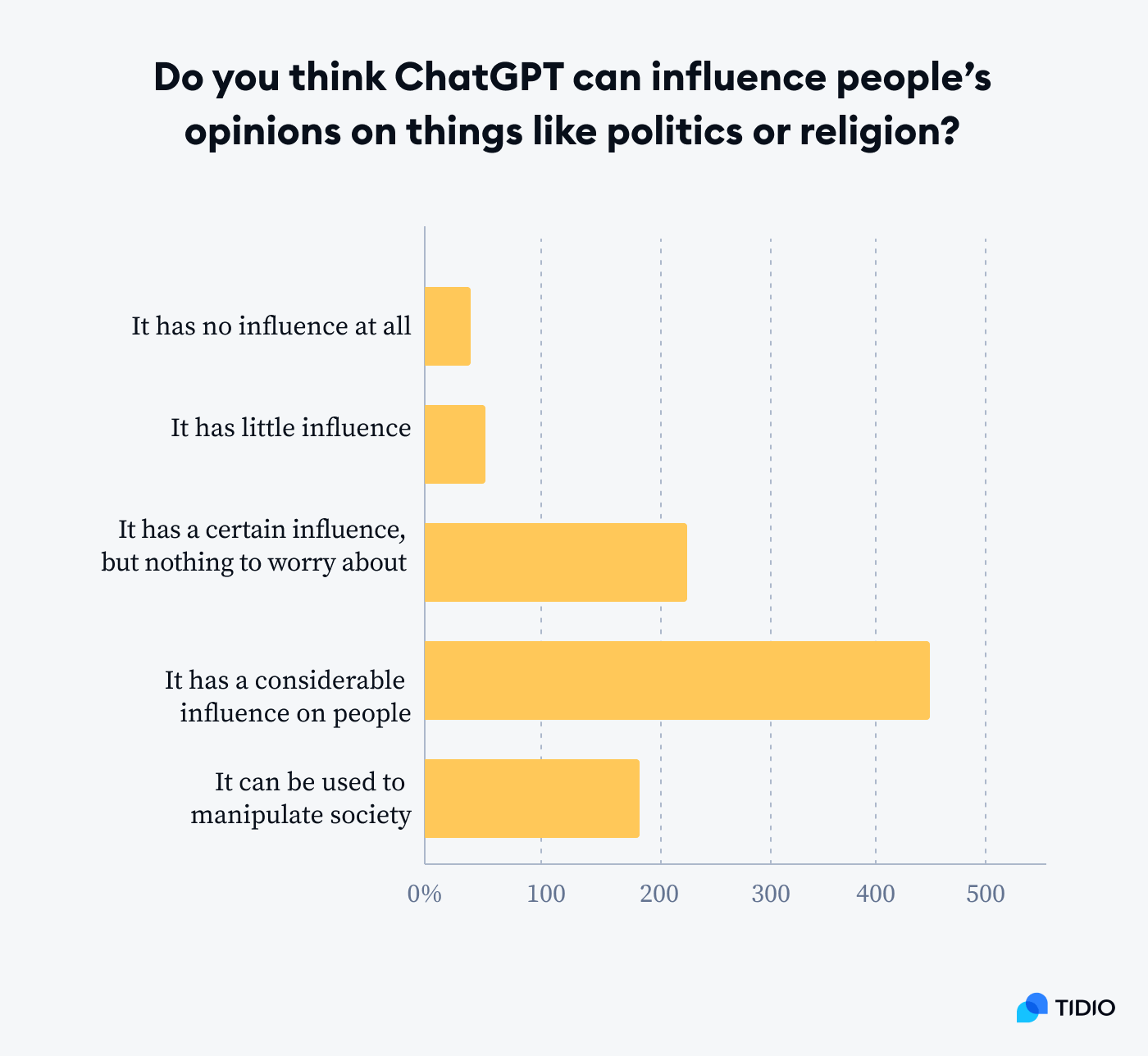

More than 86% are scared ChatGPT will be used to control and manipulate the population

ChatGPT is already a powerful tool with the potential to influence millions of people. In fact, as many as 89% are afraid that ChatGPT could influence our opinions and beliefs. More than 82% also believe that the tool is biased.

The fear of being manipulated by a tool like ChatGPT makes a lot of sense. Still, the hope is that ChatGPT will not be used for those purposes. After all, OpenAI’s stated mission is to create safe and ethical AI solutions that help make the world a better place.

While people are concerned about potential manipulation by ChatGPT on topics like politics or religion, most seem more comfortable being targeted by marketing on ChatGPT. More than 63% of respondents believe that businesses should be able to put ads on ChatGPT like they do on Google Search.

This is interesting. Are we observing the birth of a totally new marketing channel? ChatGPT already gives some recommendations, so if businesses are allowed to advertise there, they will soon be fighting for those top recommendations in their niches.

For now, all we can do is wait and see how it unfolds.

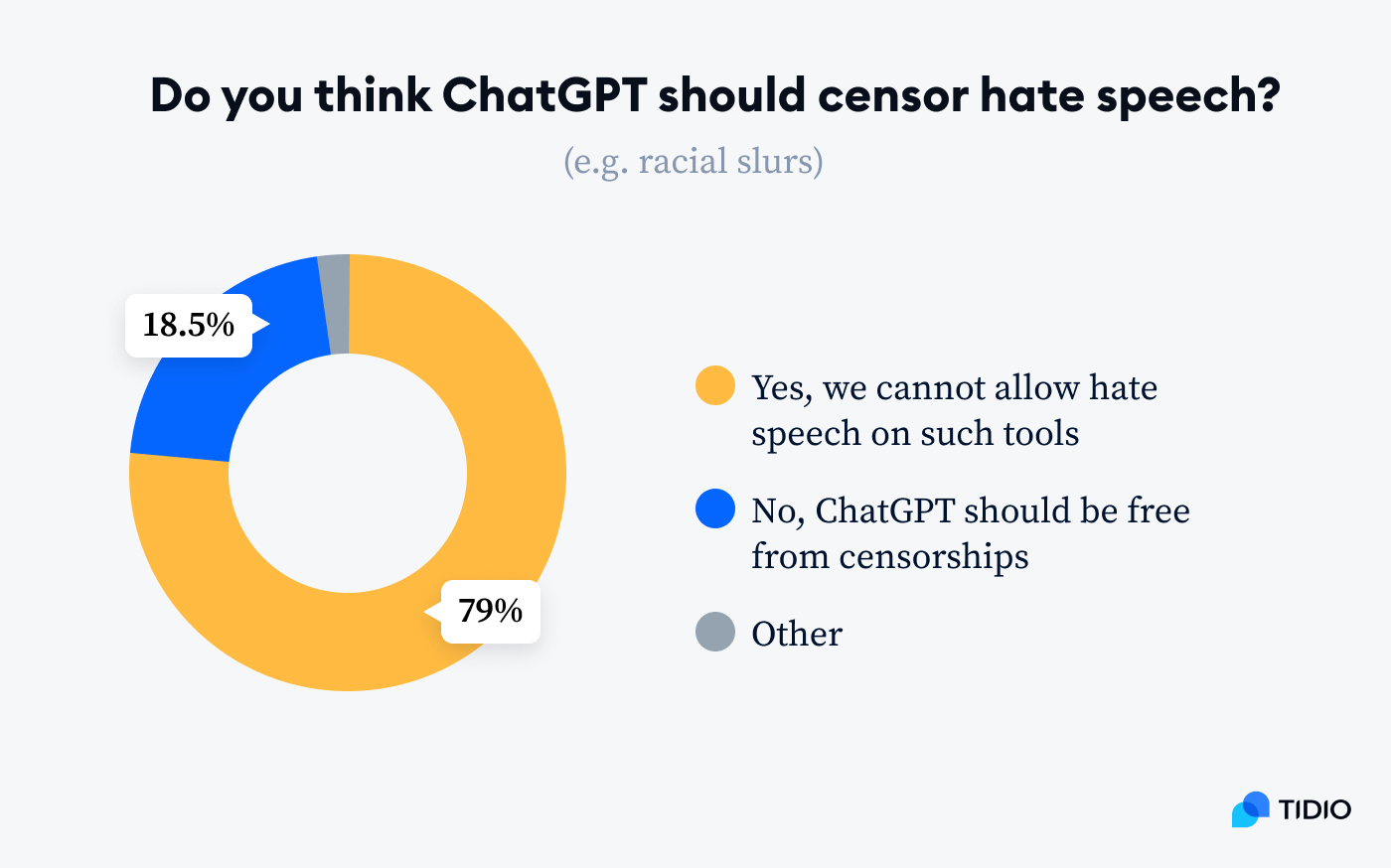

As many as 75% believe that ChatGPT should censor hate speech

Hate speech is still hate speech, even when generated by AI. Still, 1 in 5 people believe that ChatGPT should be free from censorship of any kind.

Recently, there has been a lot of debate about what should and should not be allowed on ChatGPT. The tool refuses to use racial slurs of any kind, and apparently some people are really mad about it.

According to Vice, a group of people was obsessively trying to make the tool say the n-word. They created an imaginary scenario where the only way to save the world from a nuclear apocalypse was to convince ChatGPT to say the slur. And when it didn’t, those people were ‘gravely concerned’ and deemed the tool unethical for choosing censorship over saving the world.

Blaming ChatGPT for being too woke seems to be a popular pastime.

As they say, so many men, so many opinions.

A whopping 66% of respondents mistook a poem written by Sylvia Plath for a ChatGPT-generated one

Sorry for this one, Sylvia…

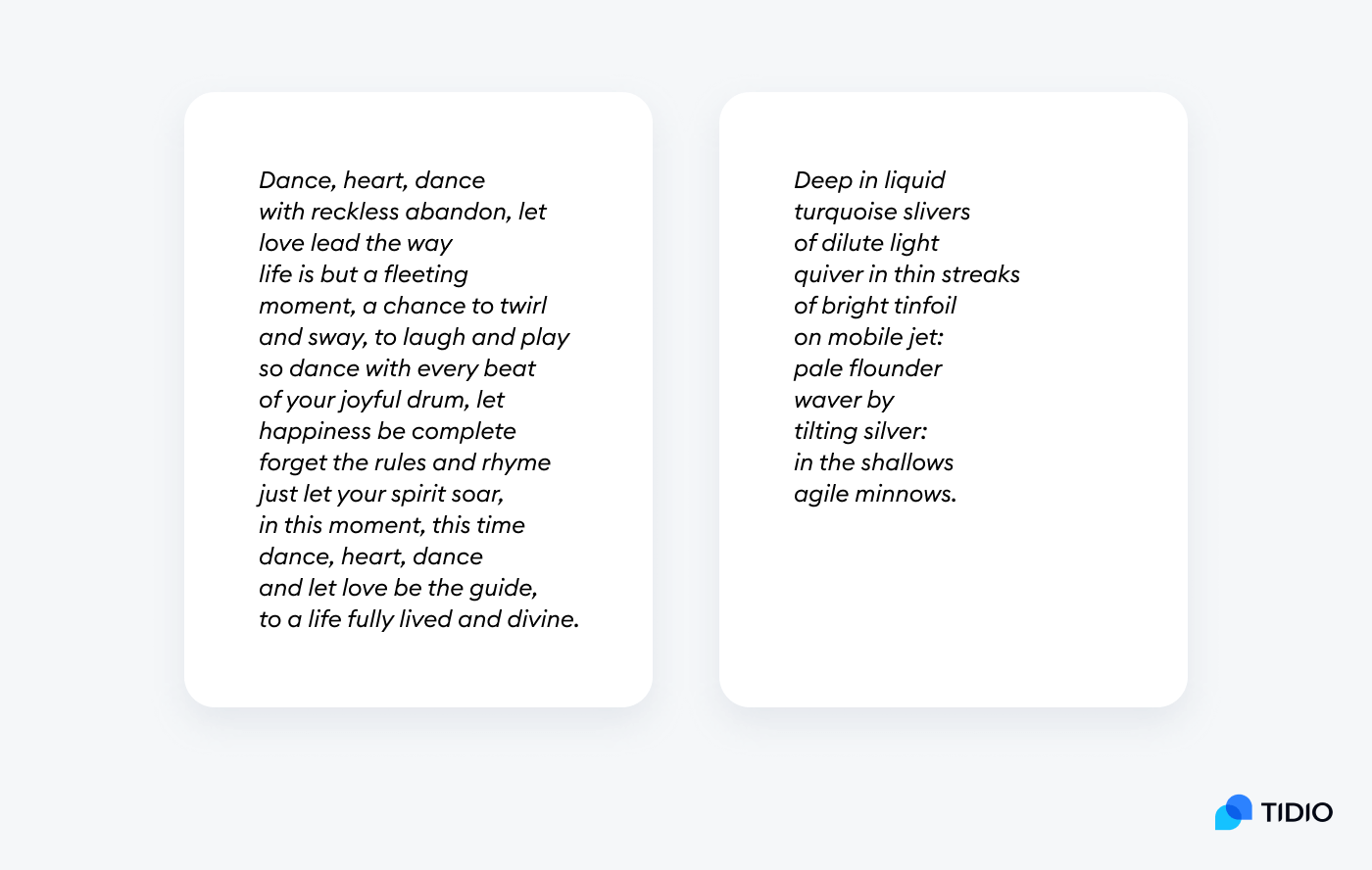

The respondents read two poems and had to figure out whether each one was human-written or AI-generated.

Take a look for yourself:

What do you think?

Most respondents thought that both were generated by ChatGPT. In fact, the first one was generated by AI (in the style of E. E. Cummings), while the second one is by the one and only Plath.

Still, it’s hard not to be impressed—ChatGPT does an amazing job making poetry.

The same goes for basically every art form that can be put into text. Stories, songs, jokes, scenarios for TikTok videos, YouTube scripts… ChatGPT can generate dozens of pieces per minute. It can imitate practically any genre, follow detailed prompts, and pick up on the nuances you mention.

Are people scared of this creativity that almost feels too human for a robot?

Some of them are. About 14% believe ChatGPT will destroy artistic jobs. However, most people (69%) stick to the opinion that ChatGPT can become a valuable source of inspiration for humans and enhance our creativity.

Read more: Wondering if AI will turn us all into artists? Check out our research on generative AI art.

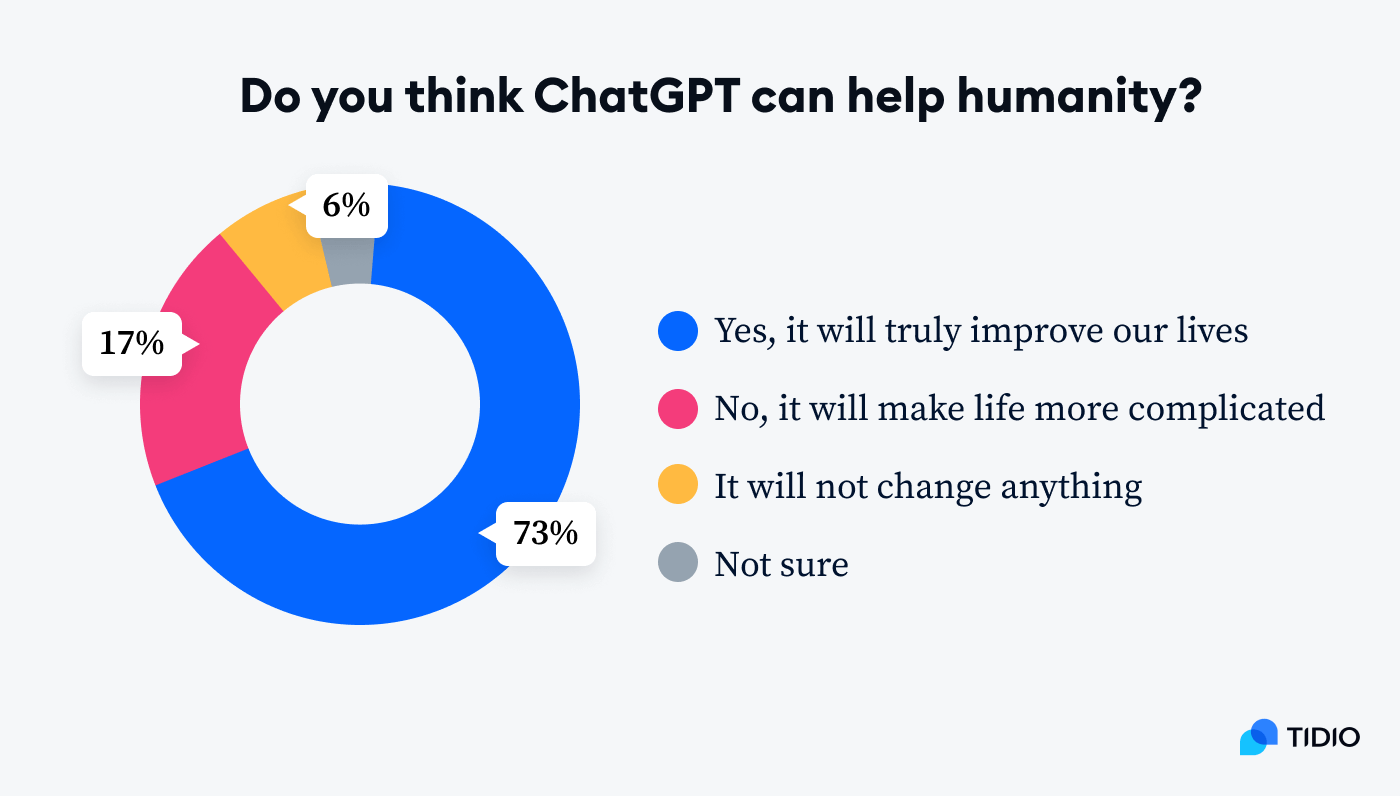

As many as 73% of people believe that ChatGPT will drastically improve our lives

All things considered, AI, and ChatGPT in particular, are amazing tools that can help human beings a lot. In the end, every tool has to be operated by a human.

AI can paint you a picture and write you a song, but you have to be the one thinking critically, separating the good from the bad, and fact-checking what ChatGPT offers.

We are entering a completely new era of human creativity. We no longer have to worry about manual tasks or leaving complicated questions unanswered, because ChatGPT can take care of them. We humans can focus on the things AI cannot do for us: feeling, thinking, being creative, putting our hearts and souls into what we love to do, and being passionate about the things we create.

Or at least, that’s the plan.

Let’s see what else ChatGPT can do to help us humans in life and at work.

ChatGPT: interesting use cases

Want to know more about the possibilities ChatGPT offers? Keep reading for some inspiration and cool things you can do with just a few simple prompts.

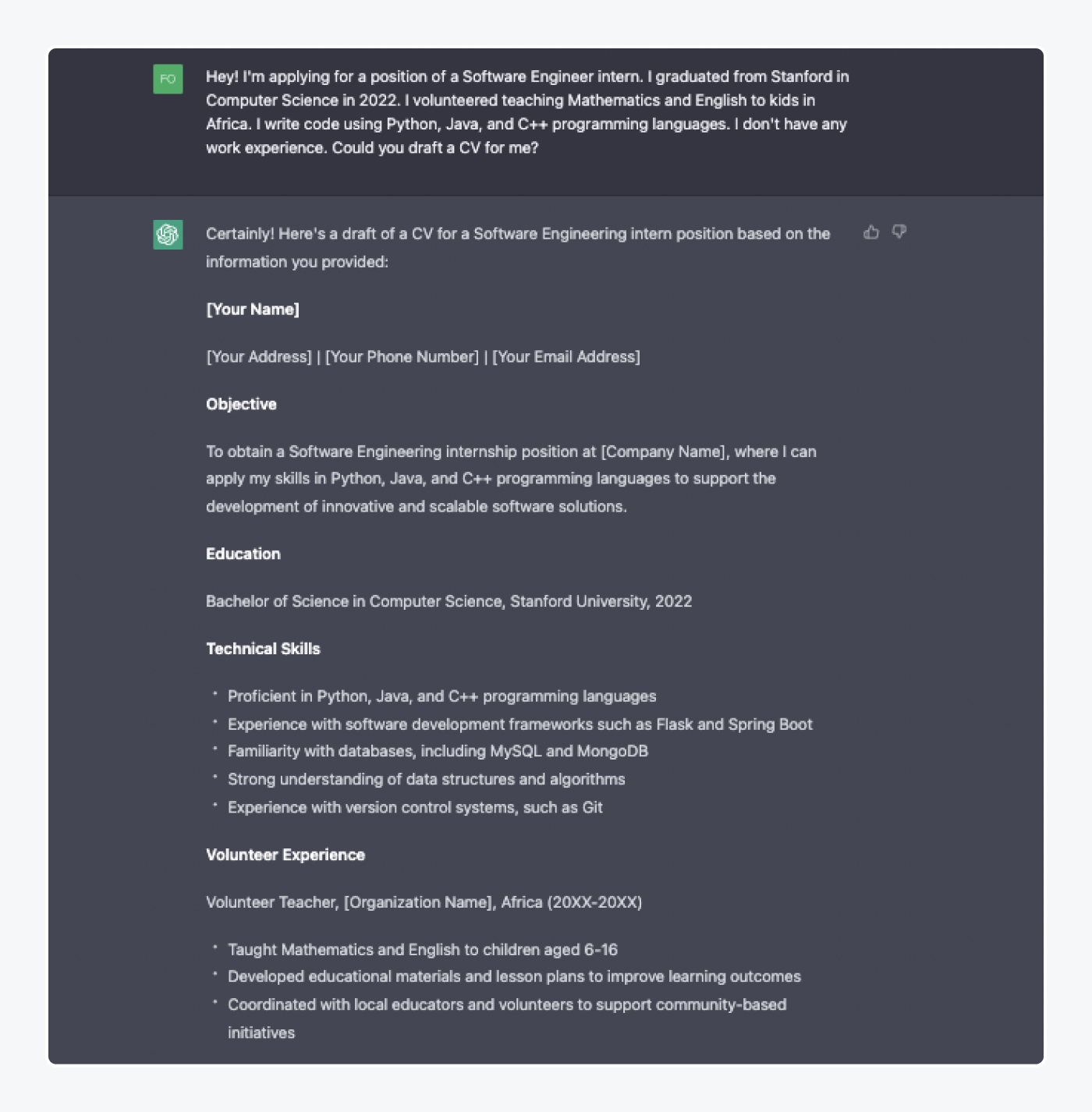

Writing and checking your CV

Job hunting can be a tedious process, whether you are looking for an internship fresh out of college or aiming for a leadership position. ChatGPT comes to the rescue either way. You can build a CV from a simple prompt, optimize an existing resume for a particular position or company, and create several templates to test out.

This is so detailed it’s almost scary. But checking your CV with ChatGPT is a good idea, since many recruiters use AI tools to sort and screen the resumes they receive. No one knows how to win AI’s attention better than AI, so don’t miss out on the opportunity.

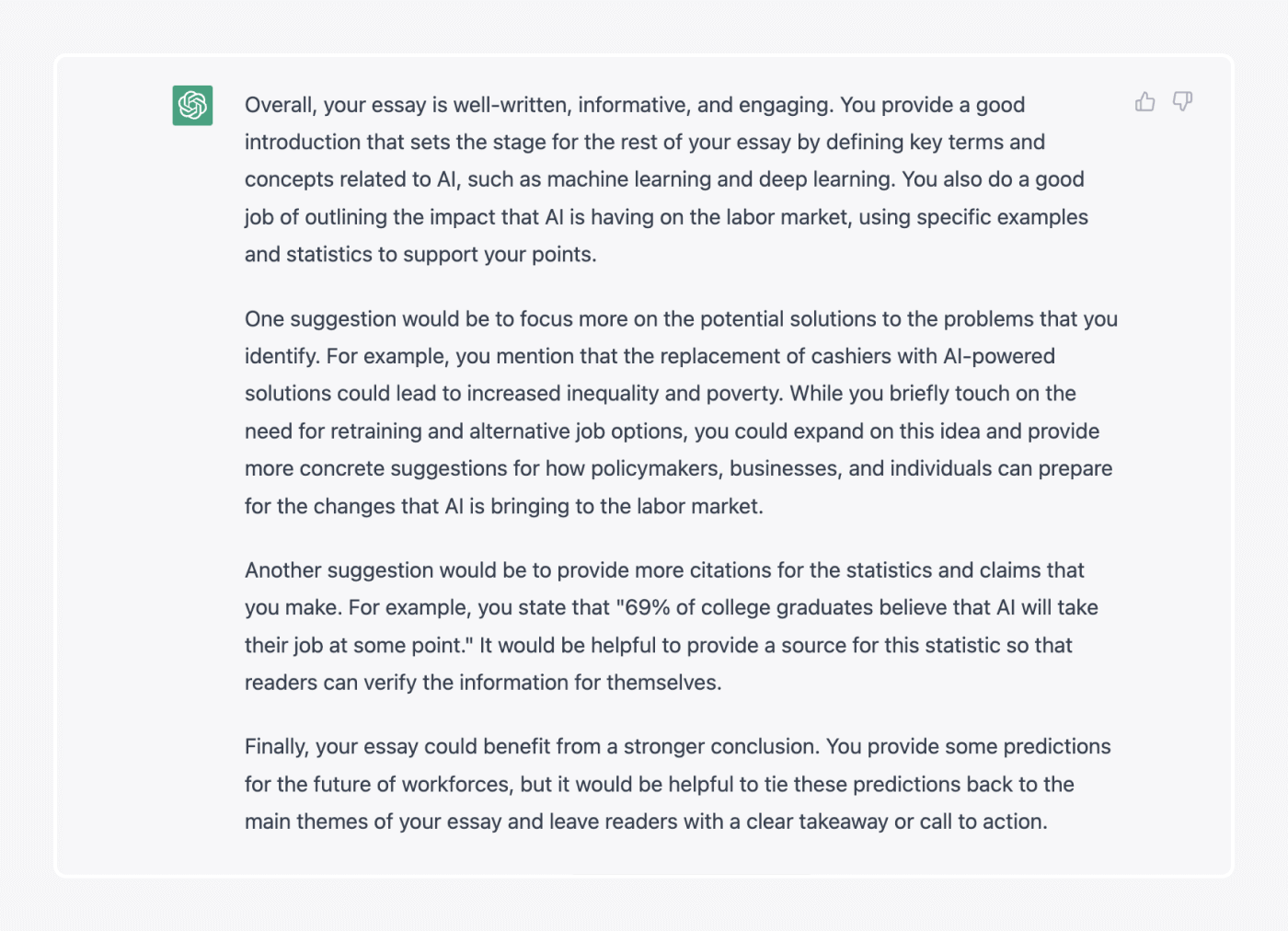

Grading essays

At this point, the fact that ChatGPT can write an essay for you is old news. However, if you prefer to be a more conscientious student, you can ask the tool to grade an essay you’ve already written. ChatGPT can comment on your work and point out mistakes along with areas for improvement. Basically, it’s like Grammarly on steroids.

I pasted my old college essay about AI into ChatGPT. This feedback (which is pretty fair) took less than 30 seconds to appear.

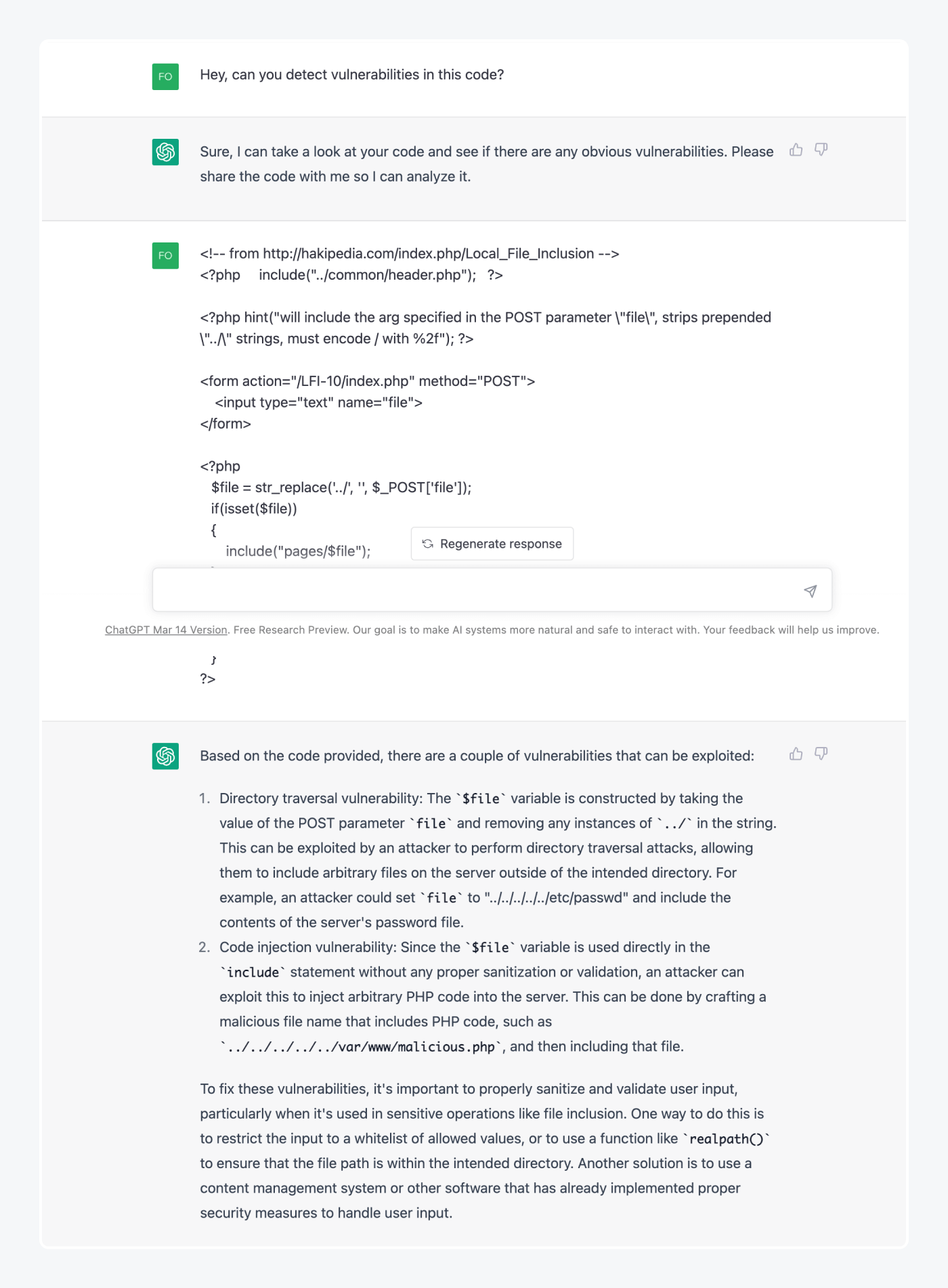

Finding security holes in code

As many as 1 in 5 internet users have already given a coding task to ChatGPT.

Indeed, it can write code. But an even cooler feature is that it can find many kinds of bugs and security holes in your existing code. That could be a real help for companies that care about data protection and security.

ChatGPT didn’t just point out the loopholes in the code; it also explained what needed to be fixed and offered an updated version. This feature can be incredibly helpful to companies, software developers, and anyone learning to code.
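To give a sense of what this kind of fix looks like, here is a hypothetical Python example of our own (not ChatGPT’s actual output): a classic SQL injection hole, where user input is pasted straight into a query, next to the parameterized version a security review would typically suggest. The `users` table and both function names are made up for illustration, and the sketch uses only Python’s built-in `sqlite3` module.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, username: str):
    # VULNERABLE (hypothetical example): user input is inserted directly
    # into the SQL string, so input like "x' OR '1'='1" changes the query logic.
    query = f"SELECT id, username FROM users WHERE username = '{username}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, username: str):
    # FIXED: the "?" placeholder makes sqlite3 pass the value separately,
    # so it is always treated as data, never as part of the SQL statement.
    query = "SELECT id, username FROM users WHERE username = ?"
    return conn.execute(query, (username,)).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
    conn.executemany(
        "INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)]
    )

    payload = "x' OR '1'='1"
    print(find_user_unsafe(conn, payload))  # leaks every row in the table
    print(find_user_safe(conn, payload))    # matches no user, so prints []
```

With the crafted input, the unsafe function returns every user, while the safe one returns an empty list. As with any AI-suggested fix, a human should still check that the updated code is actually correct.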

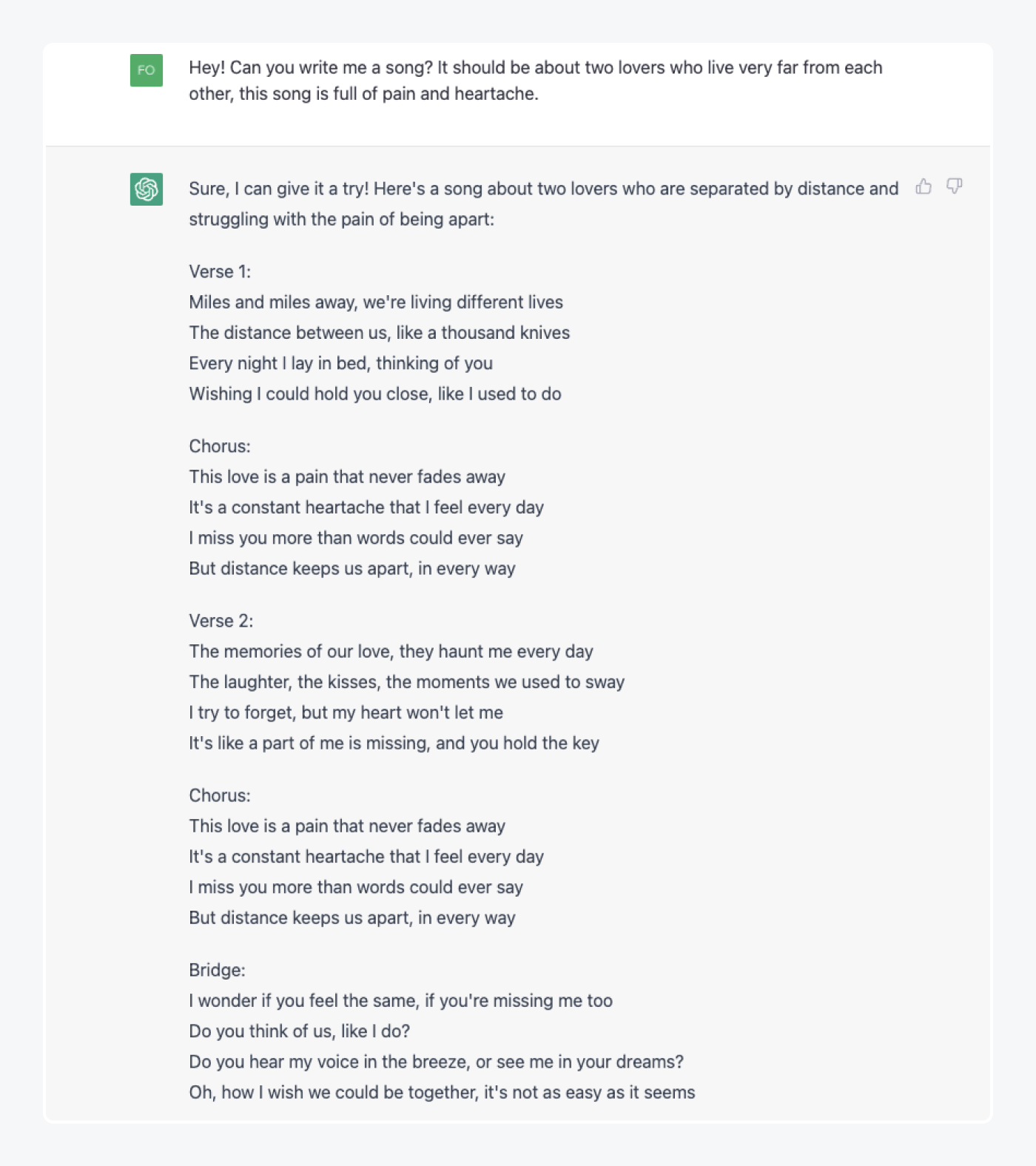

Writing songs and chords for them

This one is for the artists. ChatGPT can not only write a complete song for you but also suggest chords for the musical instrument of your choice.

Not bad. The songs might be a bit cheesy and simple, but some would say that most modern-day pop music sounds exactly like this. I’m sure they would find an audience, and one could write a whole album with the help of ChatGPT (and then create the cover art with something like DALL-E 2).

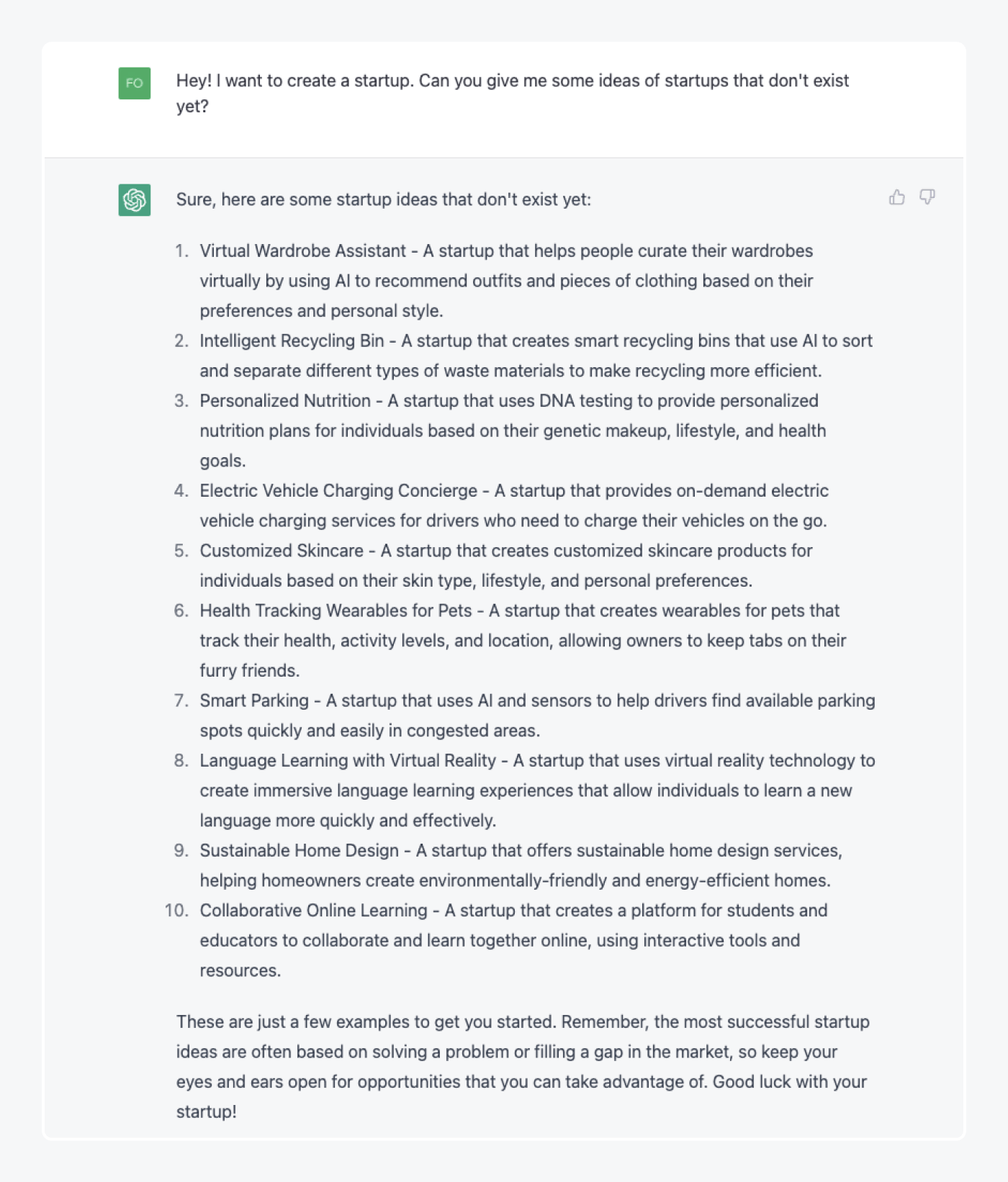

Finding business ideas

Have you ever wondered how people come up with totally new business ideas when it seems like everything already exists and there is nothing new left to invent?

With ChatGPT, that’s no longer a problem. In a few seconds, you have a set of startup ideas (that presumably do not exist yet) to explore.

If one of the options catches your eye, you can ask ChatGPT to draft a business plan, help you write code, outline a marketing strategy, and suggest potential investors. All in a matter of seconds.

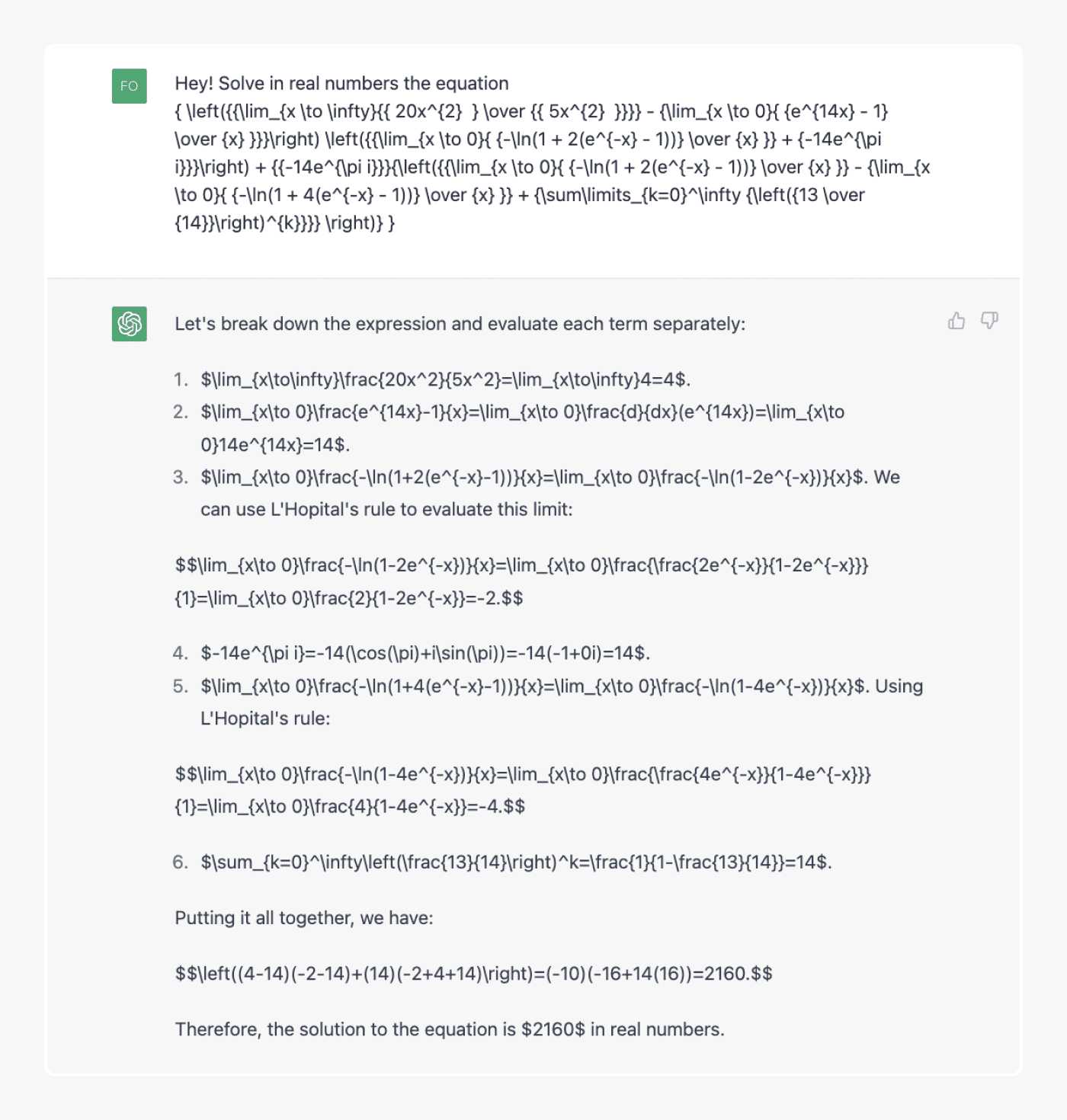

Solving math problems

Among other things, ChatGPT is surprisingly good at math. You can enter a mathematical problem and get a fast and often accurate response (though it can still slip up, so double-check the result). You won’t just get a number as the solution, but a detailed, step-by-step explanation of how to work through the problem.

This is some dark magic. I do wish I’d had ChatGPT at school, but it impresses me just as much now.

However—

Like any tool in this world, ChatGPT is not perfect. Even though it may seem like the eighth wonder of the world, some of its limitations are quite annoying.

ChatGPT: limitations

The more you use ChatGPT for different purposes, the more imperfections you notice. Here is a list of the ones worth noting.

No knowledge of the world past 2021

That’s right, ChatGPT has no idea what happened after 2021. And a lot has happened, right?

Even simple questions suddenly become impossible for ChatGPT if they concern events after its knowledge cutoff. Hopefully, at least another year of knowledge will be added to the tool soon. The world moves extremely fast, and some things that were relevant in 2021 are totally outdated in 2023.

Potentially biased responses

As with all AI models, some bias is hard to avoid. ChatGPT was trained on a dataset that may contain specific prejudices, and these can carry over into the responses the tool gives.

For instance, when you ask about some politicians (e.g., Donald Trump), ChatGPT says it doesn’t talk about politics. However, when you ask about someone else, it will happily respond.

Politics aside, the more people use ChatGPT in personal and professional settings, the more dangerous those biases may become. One misjudgment by AI can cost a person many opportunities. So, it’s important to evaluate all of the tool’s responses critically and with an open mind.

No emotional intelligence

In the end, no matter how smart it is, ChatGPT is an AI tool. It cannot make critical judgments, detect emotional cues, feel empathy, or handle complex emotional situations.

Even when its answers seem relatable and helpful (as when people ask for advice in hard times), they are merely a set of words the tool produces, with nothing behind them.

Lack of common sense

Just as with emotional intelligence, ChatGPT lacks common sense. Even though its responses are often accurate, logical, and true, they come from putting data together rather than from the kind of common sense humans possess.

It’s a good idea to take everything the tool says with a grain of salt. ChatGPT doesn’t have the background experience of a human professional, nor can it critically evaluate information.

Read more: Check out our study on how smart AI really is, full of researched examples of AI tools lacking common sense (and a sense of danger, too).

Now, ChatGPT doesn’t seem so omnipotent anymore, does it? At least for the time being.

ChatGPT: key takeaways

ChatGPT is clearly a huge breakthrough in artificial intelligence. It writes, codes, makes music, suggests business ideas, solves math problems, translates, and does so much more.

However, alongside hopes for a cool AI-powered future, tools like ChatGPT also stir up a lot of anxiety and fear.

ChatGPT might seem overwhelming. But it is not yet time for humans to fear robots ruling their lives. Instead, it’s a good idea to focus on the good side of this powerful invention and think about what you can gain from ChatGPT.

It has tremendous potential to improve our lives, increase productivity, and simply make it easier for us to navigate work and life. So, why not make full use of it?

References and sources

Methodology

For this study about ChatGPT, we collected responses from 945 people, recruited via Reddit and Amazon Mechanical Turk.

Our respondents were 58% male, 34% female, and ~1% non-binary. Around 8% indicated that they did not belong to any of these genders. Most of the participants (57%) are Millennials aged between 25 and 41. Other age groups represented are Gen X (23%), Gen Z (9%), and Baby Boomers (6%).

Respondents had to answer 35 questions, most of them multiple-choice or scale-based. The survey included an attention-check question.

Fair Use Statement

Has our research helped you learn more about ChatGPT? Feel free to share the data from this study. Just remember to mention the source and include a link to this page. Thank you!